Initial release: version 1.0, April 2018

You can download release 1.0 of UHD Phase B Guidelines from this link: Ultra-HD-Forum-Phase-B-Guidelines-v1.0

- The Ultra HD Forum produces Guidelines to help the industry navigate the complex UHD landscape

- The Ultra HD Forum focuses on end-to-end workflows from camera to consumer

- UHD Phase A Guidelines describe end-to-end processes for creating a linear UHD service using technologies that were commercially available by 2016

- The fourth version of the UHD Phase A Guidelines was released in September 2017

- UHD Phase B Guidelines introduce and demystify next-generation UHD technologies that operators are exploring for future enhanced UHD services

UHD Phase B Technology Selection Criteria

- The technology must be proven to be functional in an end-to-end workflow that is within scope of the Guidelines document (or that portion of the workflow that pertains to the technology), either via early deployment or via interop testing to members’ satisfaction – AND

- At least two service providers (or one major provider) demonstrate interest in the technology. Service providers do not need to be members of the UHDF; their support may be demonstrated, for example, by written confirmation to the UHDF working group or by verifiable products in production that support the technology.

UHD Phase B Technologies

- Dynamic HDR metadata systems, including Dolby Vision™ and SL-HDR1

- Dual layer HDR technologies

- High frame rate

- Next generation audio including Dolby® AC-4 and MPEG-H audio

- Content-aware encoding

- AVS2 video codec (pending public availability of the English language spec)

Dynamic HDR Metadata

- UHD Phase A Guidelines described use of PQ10, HDR10 (i.e., PQ10 with static metadata) and HLG

- UHD Phase B Guidelines describe two dynamic metadata systems: Dolby Vision and SL-HDR

Dolby Vision

- Dolby Vision: an ecosystem solution that utilizes dynamic HDR metadata to:

  - create, distribute and render HDR content, and

  - preserve artistic intent across a wide variety of distribution systems and displays

- Encoding/Decoding Overview

- Color Volume Mapping (Display Management)

- ICtCp in the HDR workflow

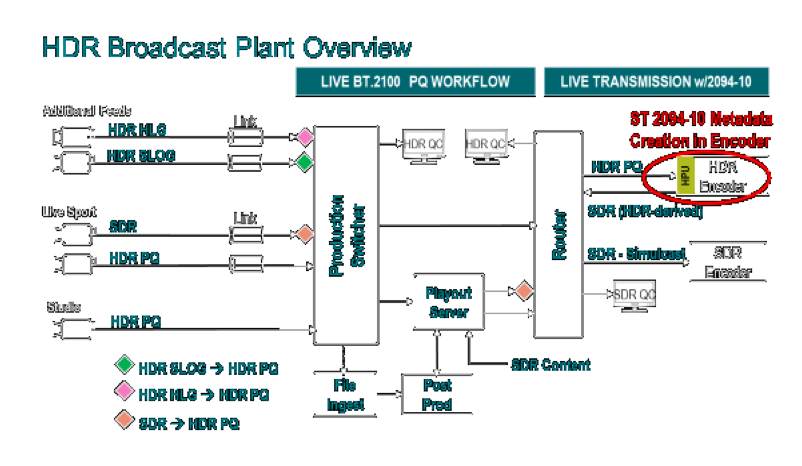

- Use of Dolby Vision in Terrestrial Broadcast

- Standards in place and in development

SL-HDR

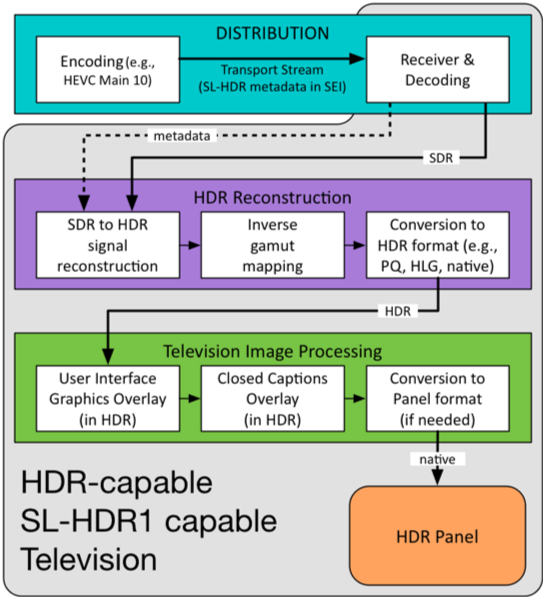

- SL-HDR is a method of down-conversion to derive an SDR/BT.709 signal from an HDR/WCG signal

- SL-HDR dynamic HDR metadata contains the necessary information to reconstruct the HDR/WCG signal

- SL-HDR supports PQ, HLG, and other HDR/WCG formats

- Description of deriving SDR/709 from HDR/WCG content and carriage of SL-HDR dynamic HDR metadata

  - Including SHVC use cases

- Description of B2C use cases:

  - SL-HDR1-capable HDR display

  - HDR-capable display connected to SL-HDR-capable decoder (e.g., STB)

  - SDR display

- Uses of SL-HDR in a B2B workflow (e.g., network to affiliate stations)

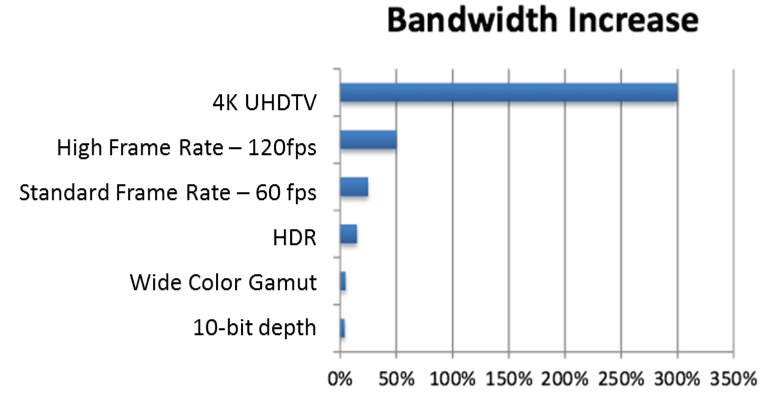
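The idea of deriving an SDR signal and carrying metadata that lets a receiver reconstruct the HDR signal can be illustrated with a toy example. The sketch below is not the SL-HDR algorithm: the power-law curve, the function names `downconvert_scene` and `reconstruct_scene`, and the metadata fields `peak_nits` and `gamma` are all assumptions chosen only to show the round trip. The metadata is "dynamic" in the sense that it is recomputed for each scene.

```python
def downconvert_scene(hdr_nits, gamma=2.4):
    """Map one scene's HDR luminance samples (in nits) to SDR values in [0, 1].

    Toy model only: a per-scene peak plus a power-law curve stand in for the
    real down-conversion. hdr_nits is assumed to be a non-empty list.
    """
    peak_nits = max(hdr_nits) or 1.0  # avoid dividing by zero on a black scene
    sdr = [(v / peak_nits) ** (1.0 / gamma) for v in hdr_nits]
    # Hypothetical per-scene metadata carried alongside the SDR signal.
    metadata = {"peak_nits": peak_nits, "gamma": gamma}
    return sdr, metadata


def reconstruct_scene(sdr, metadata):
    """Invert the toy mapping using only the SDR samples and the metadata."""
    return [metadata["peak_nits"] * (v ** metadata["gamma"]) for v in sdr]


if __name__ == "__main__":
    scene = [0.0, 100.0, 500.0, 1000.0]
    sdr, md = downconvert_scene(scene)
    print("SDR samples:", sdr)
    print("Reconstructed HDR:", reconstruct_scene(sdr, md))
```

An SDR display would use the `sdr` samples directly and ignore the metadata, while an HDR-capable receiver would apply `reconstruct_scene`, which mirrors the three B2C cases listed above at a conceptual level.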

HFR efficiently improves viewer experience

- HFR is defined as 100, 120/1.001 and 120 fps

- 120/1.001 is included; however, the Ultra HD Forum recommends integer frame rates

- Description of HFR in ATSC and DVB standards

- Description of temporal scalability for backward compatibility

- Description of temporal filtering for improving the 60 fps backward-compatible version

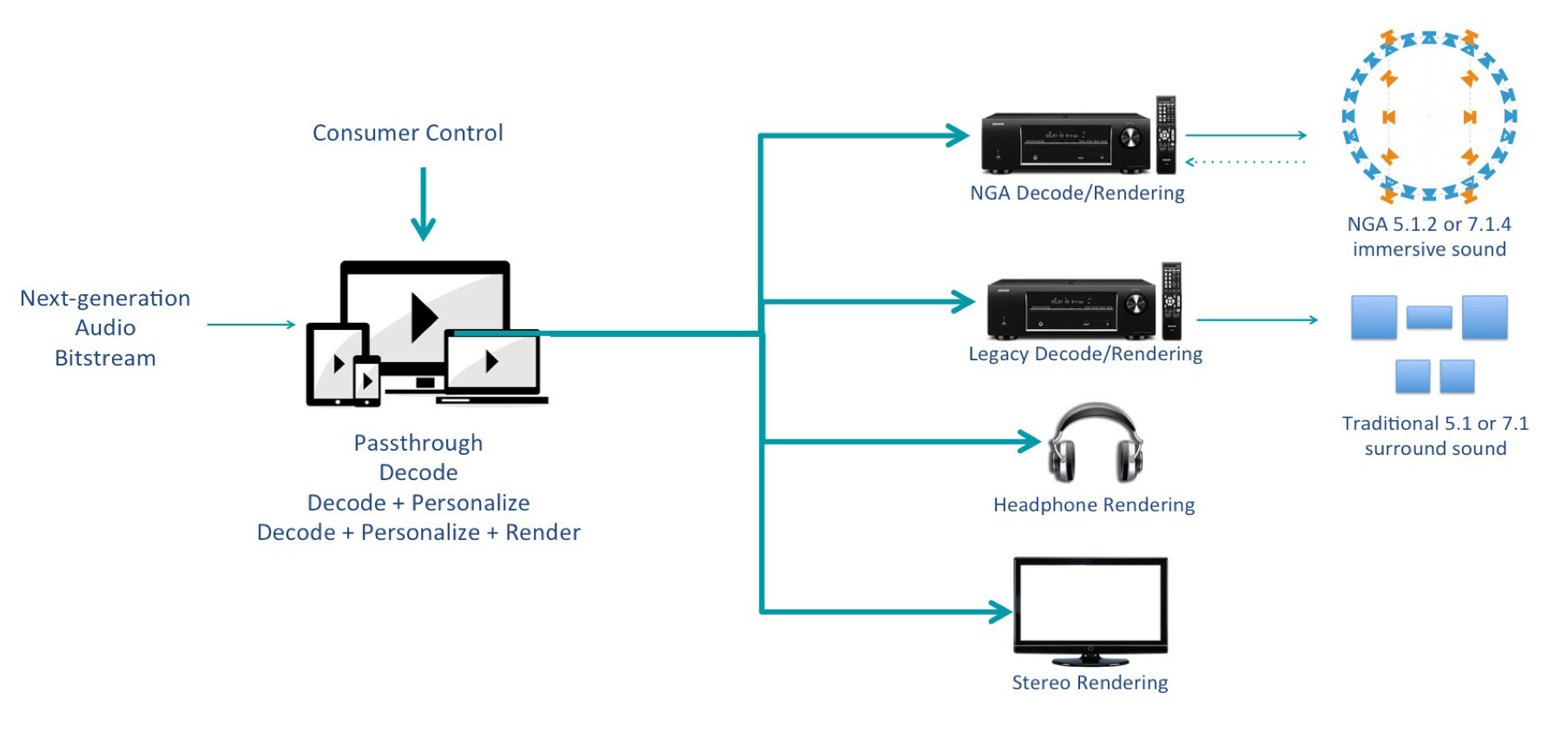
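As a rough illustration of temporal scalability and temporal filtering, the sketch below splits an HFR frame sequence into a half-rate base layer and an enhancement layer, and shows a simple pair-averaging filter for the base layer. It is a conceptual toy under stated assumptions: frames are represented by single float values, the function names are hypothetical, and the averaging filter is a stand-in rather than the filtering defined in the ATSC or DVB standards.

```python
from fractions import Fraction

# The three HFR rates named in the definition above, kept exact.
HFR_RATES = [Fraction(100), Fraction(120000, 1001), Fraction(120)]


def split_temporal_layers(frames):
    """Split an HFR sequence into a half-rate base layer and an enhancement layer.

    A legacy decoder plays only the base layer; an HFR decoder uses both.
    """
    return frames[0::2], frames[1::2]


def merge_temporal_layers(base, enhancement):
    """Interleave the two layers back into the full-rate sequence."""
    merged = []
    for i, frame in enumerate(base):
        merged.append(frame)
        if i < len(enhancement):
            merged.append(enhancement[i])
    return merged


def temporally_filtered_base(frames):
    """Toy temporal filter: average each pair of HFR frames into one base frame.

    Blending adjacent frames adds motion blur that simply dropping every
    other frame would lose (assumed filter, for illustration only).
    """
    return [(frames[i] + frames[i + 1]) / 2 for i in range(0, len(frames) - 1, 2)]


if __name__ == "__main__":
    for rate in HFR_RATES:
        print(f"HFR {float(rate):.3f} fps -> base layer {float(rate / 2):.3f} fps")
```

Using `Fraction` keeps the 120/1.001 rate exact, so halving it gives exactly 60/1.001 for the base layer.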

Next Generation Audio

- NGA complements and completes the UHD visual experience; new features include:

  - Immersive 3-dimensional sound

  - Personalization capabilities

  - Consistent playback on different speaker configurations including headphones

NGA in the UHD Phase B Guidelines

- Excellent “roadmap” to common NGA terms and definitions

- Special thanks to ATSC for their work in this area; see ATSC A/342 Part 1, Audio Common Elements, at www.atsc.org

- Channel-based, Object-based and Scene-based (aka HOA) audio concepts explained

- Components and Preselections

  - How preselections are composed of components to deliver audio choices to consumers efficiently

- UHD Phase B Guidelines detail Dolby AC-4 and MPEG-H Audio

  - Including extensive description of the systems’ metadata: how it’s used and what it’s good for

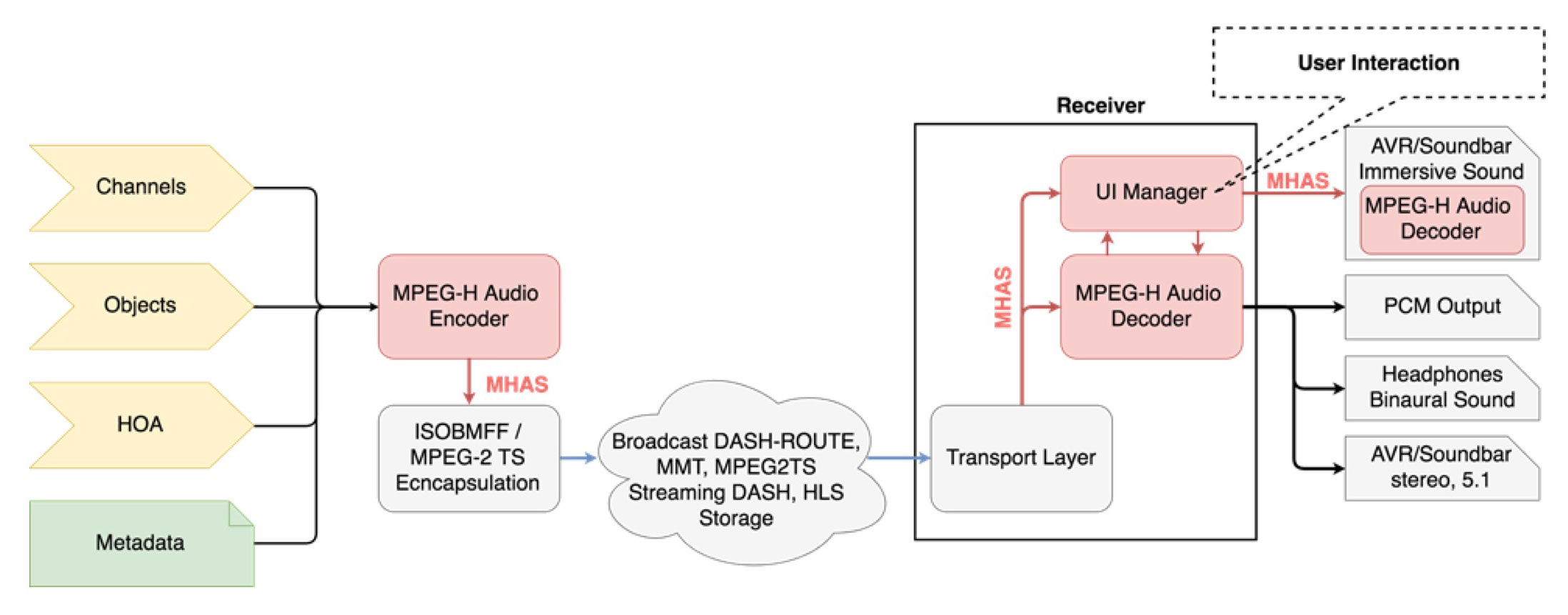
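The relationship between components and preselections can be pictured as a small data model. The sketch below is an assumption-laden illustration, not the signaling defined by AC-4, MPEG-H or ATSC A/342: the `Preselection` class, the component names, and the `components_to_deliver` helper are all hypothetical. It shows why the approach is efficient: a component shared by several preselections is delivered once.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Preselection:
    """A named audio choice offered to the consumer (hypothetical model)."""
    label: str
    component_names: tuple


def components_to_deliver(preselections):
    """Return each component needed by any preselection, listed once.

    Shared components (for example a music-and-effects bed) are not repeated.
    """
    needed = []
    for preselection in preselections:
        for name in preselection.component_names:
            if name not in needed:
                needed.append(name)
    return needed


# Hypothetical service with two language choices sharing one bed.
ENGLISH = Preselection("English", ("music_and_effects", "dialog_en"))
SPANISH = Preselection("Spanish", ("music_and_effects", "dialog_es"))

if __name__ == "__main__":
    print(components_to_deliver([ENGLISH, SPANISH]))
```

In this example the service carries three components instead of two complete mixes, and the receiver assembles whichever preselection the consumer picks.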

MPEG-H Audio

- Efficiently carries any combination of Channel-, Object- and Scene-based signals

- Includes Higher Order Ambisonics (HOA), a scene-based signal

  - Each produced signal channel is part of an overall description of the entire sound scene, independent of the number and locations of loudspeakers

- Metadata enables rendering, advanced loudness control, personalization and interactivity, dialog enhancement, etc.

- UHD Phase B includes a rich description of all facets of MPEG-H audio, including metadata details

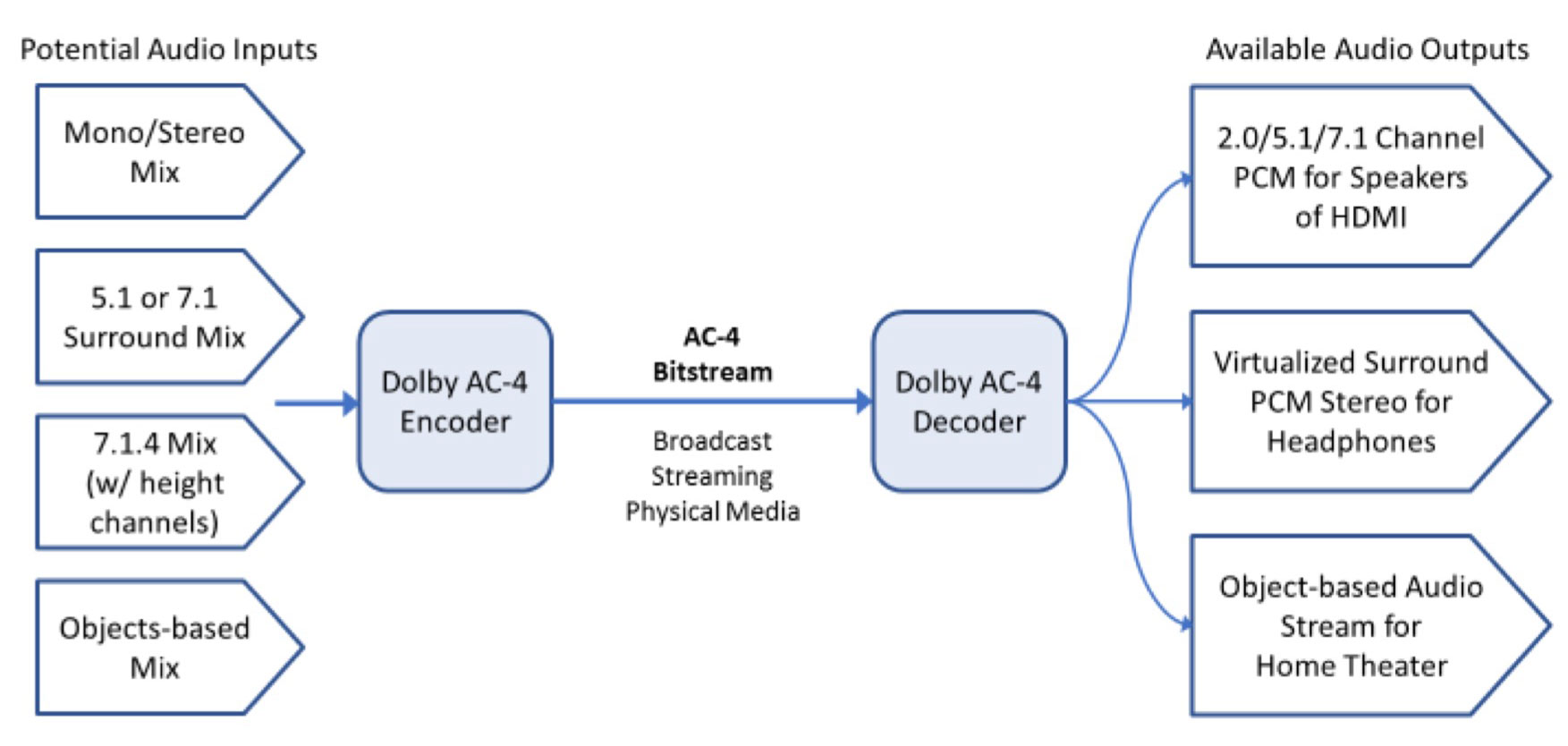

Dolby® AC-4 Audio

- Efficiently carries Channel-based and Object-based audio elements, or a combination of the two

- AC-4 decoders combine the audio elements to tailor the output to the consumer’s set-up

- UHD Phase B explains key features including:

  - Core vs. Full Decode

  - Sampling Rate Scalable Decoding

  - Bitstream Splicing

  - Support for Separated Elements

  - Video Frame Synchronous Coding

  - Dialog Enhancement

Content-Aware Encoding

- Content-Aware Encoding, or Content-Adaptive Encoding (CAE), is a class of techniques for improving coding efficiency

- Intelligently exploits properties of the content to reduce bitrate

  - “Simple” content, such as scenes with little motion or static images, is encoded using fewer bits

  - “Complex” content, such as high-motion scenes or waterfalls, is encoded using the bits necessary for quality reproduction

- Since “simple” content is prevalent, the use of CAE techniques can result in significant bandwidth savings
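A minimal sketch of the idea, assuming a very crude complexity measure: the mean absolute difference between consecutive frames stands in for whatever analysis a real CAE encoder performs, and the bitrate floor, ceiling and `max_complexity` scale are hypothetical numbers picked for illustration. Frames are modeled as flat lists of pixel values in the range 0 to 1.

```python
def scene_complexity(frames):
    """Mean absolute pixel difference between consecutive frames (toy motion proxy)."""
    total = 0.0
    count = 0
    for previous, current in zip(frames, frames[1:]):
        for a, b in zip(previous, current):
            total += abs(a - b)
            count += 1
    return total / count if count else 0.0


def target_bitrate_kbps(complexity, floor_kbps=4000, ceiling_kbps=16000,
                        max_complexity=0.5):
    """Scale the bitrate between a floor and a ceiling according to complexity.

    All three parameters are illustrative assumptions, not recommended values.
    """
    ratio = min(complexity / max_complexity, 1.0)
    return floor_kbps + ratio * (ceiling_kbps - floor_kbps)


if __name__ == "__main__":
    static_scene = [[0.2, 0.2, 0.2, 0.2]] * 3           # nothing changes
    busy_scene = [[0, 1, 0, 1], [1, 0, 1, 0], [0, 1, 0, 1]]  # every pixel flips
    for name, scene in (("static", static_scene), ("busy", busy_scene)):
        c = scene_complexity(scene)
        print(name, "complexity", c, "target kbps", target_bitrate_kbps(c))
```

Because simple scenes drop to the floor bitrate while complex scenes keep the full allocation, the average bitrate over typical programming falls without starving the difficult content.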
